4 of the best technical SEO tools

If you’re looking to level up your SEO efforts, having the right tools is essential.

This article explores four of the best technical SEO tools today:

- Screaming Frog.

- Ahrefs.

- URL Profiler.

- GTmetrix.

Each tool offers unique capabilities to help optimize website performance, visibility and user experience.

1. Screaming Frog SEO Spider

Top-line overview

- Desktop-based tool.

- Cost-effective.

- Produces vast quantities of data.

- Steeper learning curve.

- Limited to technical SEO findings only.

Detail and usage

A long-time staple for technical SEO specialists, Screaming Frog is a cost-effective tool that can be downloaded and executed from a desktop environment.

As with most desktop-based tools, Screaming Frog uses your workstation’s processing power and your network’s bandwidth. There is no need for a cloud platform, making it cost-effective.

The paid version of the tool (highly recommended) costs only $259 per year, whereas many cloud-based alternatives cost this much per month.

The tool crawls websites and produces data for technical SEO analysis, making Screaming Frog one of the most powerful desktop solutions.

It provides a large amount of valuable data at a low cost, so many agencies use it and maintain at least one or two paid licenses unless they have their own crawling solutions.

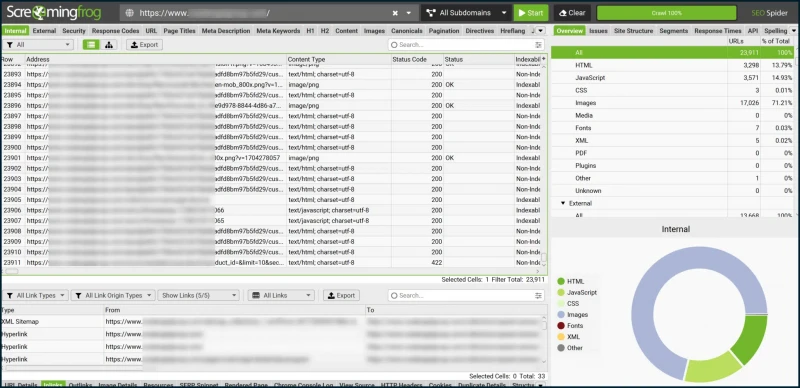

Within the main interface, the default view is a large table of crawl data for all internal URLs. Tabs along the top of the main table allow you to isolate key areas of the site’s code.

Screaming Frog can easily crawl tens to hundreds of thousands of URLs. However, your workstation’s specs and network speed affect both how fast a crawl runs and how large a crawl is feasible.

If you crawl larger sites (ecommerce stores in particular), running Screaming Frog on a machine with plenty of memory (16GB–32GB of RAM or more) is recommended.

To mitigate RAM consumption, you can either:

- Switch Screaming Frog to database storage mode (replaces standard crawl files with a centralized database on your system, so crawl data is written to disk rather than held in RAM).

- Run it headless via the command line.

With the command-line-driven, headless execution option, you can also explore running Screaming Frog from a cloud environment. It is extremely flexible and can be automated via its built-in scheduling features.
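As a rough sketch of what a scripted headless crawl could look like, the Python snippet below assembles a command line and hands it to `subprocess`. The executable name and the flags used here (`--crawl`, `--headless`, `--save-crawl`, `--output-folder`, `--export-tabs`) are assumptions based on Screaming Frog's command-line options; they can vary by version and operating system, so check them against the user guide before relying on them. The URL and output folder are placeholders.

```python
import shutil
import subprocess

# Assumed executable name (typical Linux install); other platforms use a different launcher.
SF_BINARY = "screamingfrogseospider"


def build_crawl_command(start_url: str, output_dir: str) -> list[str]:
    """Assemble a headless crawl command as an argument list."""
    return [
        SF_BINARY,
        "--crawl", start_url,             # site to crawl
        "--headless",                     # run without the desktop UI
        "--save-crawl",                   # keep the crawl file for later inspection
        "--output-folder", output_dir,    # where the crawl and exports are written
        "--export-tabs", "Internal:All",  # export the default Internal > All table
    ]


def run_crawl(start_url: str, output_dir: str) -> int:
    """Run the crawl if the binary is on PATH and return its exit code."""
    if shutil.which(SF_BINARY) is None:
        raise FileNotFoundError(f"{SF_BINARY} not found on PATH")
    result = subprocess.run(build_crawl_command(start_url, output_dir), check=False)
    return result.returncode


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe to try.
    print(" ".join(build_crawl_command("https://example.com", "/tmp/sf-crawl")))
```

A wrapper like this can be triggered from cron or a cloud job runner, which is one way to approach the cloud setup mentioned above.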

Another thing to remember is that Screaming Frog can be customized to extract non-standard data via its custom extraction feature, which accepts XPath expressions.

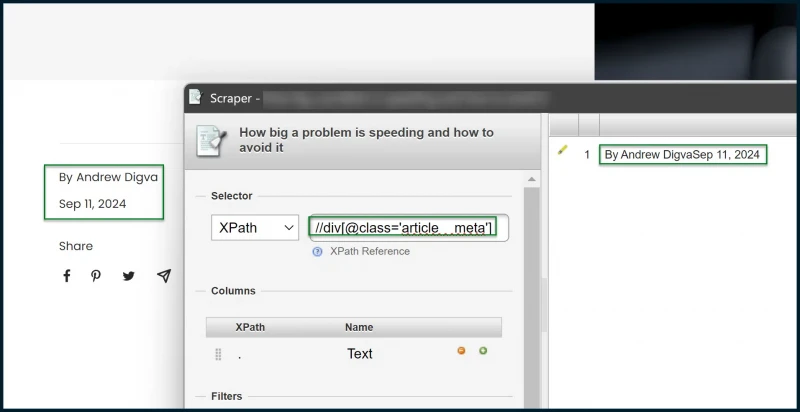

If you are crawling a site with flat URL architecture, you may have issues separating URLs by content type. (For example, individual blog posts where /blog/ or /news/ are not in the URL string.)

Using a Chrome extension like Scraper, you could isolate an element that only appears on an individual blog post (e.g., post meta):

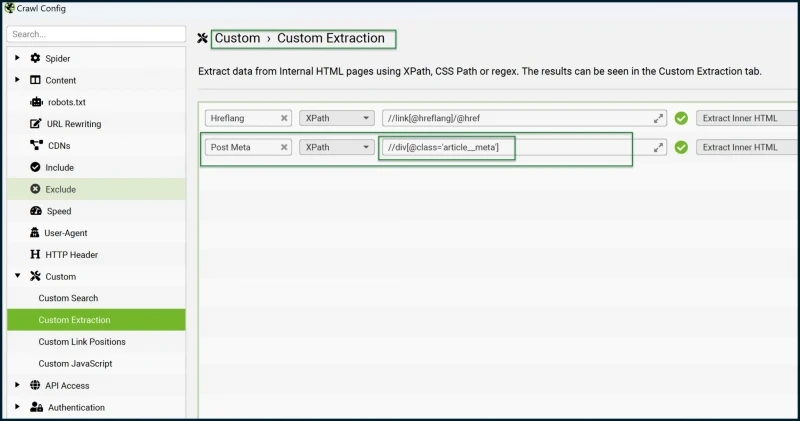

From here, you can take the resulting XPath expression and plug it into Screaming Frog’s custom extraction settings.

Because every site is coded differently, the XPath needed to isolate post meta will vary from site to site.

Once you have the correct XPath expression plugged in, you’ll see the returned post meta for each blog post. Pages that are not individual blog posts will return an empty cell, thus allowing you to separate the data.
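To illustrate the idea outside of Screaming Frog, here is a minimal Python sketch that applies an XPath expression to two pages and separates them by whether the post meta element exists. The markup, the `post-meta` class name and the URLs are all hypothetical. The standard library's `ElementTree` is used to keep the example self-contained; it only handles well-formed markup and a subset of XPath, so real-world HTML would need a more forgiving parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified pages; real markup will differ per site.
PAGES = {
    "/my-first-post": "<html><body><div class='post-meta'>12 March 2024</div></body></html>",
    "/about-us": "<html><body><p>About the team</p></body></html>",
}

# Hypothetical XPath for the post meta element.
POST_META_XPATH = ".//div[@class='post-meta']"


def extract_post_meta(markup: str) -> str:
    """Return the post meta text, or an empty string if the element is absent."""
    node = ET.fromstring(markup).find(POST_META_XPATH)
    if node is None:
        return ""
    return (node.text or "").strip()


def split_by_content_type(pages: dict[str, str]) -> tuple[list[str], list[str]]:
    """Separate URLs into blog posts (post meta found) and everything else."""
    posts, others = [], []
    for url, markup in pages.items():
        if extract_post_meta(markup):
            posts.append(url)
        else:
            others.append(url)
    return posts, others


if __name__ == "__main__":
    print(split_by_content_type(PAGES))
```

The empty string returned for non-post pages plays the same role as the empty cell in the Screaming Frog export.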

This can also be useful for content performance analysis, as you can extract the date when each post was published.

This lets you divide each post’s clicks/sessions (from Search Console or GA4) by the number of days since publication to evaluate content performance more fairly (otherwise, older posts always seem to be the top performers).
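The calculation itself is simple. The sketch below uses made-up click counts and publication dates to show how an older post with more total clicks can rank below a newer post once clicks are divided by days live. The field names and figures are illustrative only.

```python
from datetime import date


def clicks_per_day(clicks: int, published: date, as_of: date) -> float:
    """Clicks divided by days since publication (minimum of one day)."""
    days_live = max((as_of - published).days, 1)
    return clicks / days_live


# Hypothetical data: clicks from Search Console, dates from the XPath extraction.
posts = [
    {"url": "/old-post", "clicks": 3650, "published": date(2021, 1, 1)},
    {"url": "/new-post", "clicks": 900, "published": date(2024, 1, 1)},
]
as_of = date(2024, 3, 31)

ranked = sorted(
    posts,
    key=lambda p: clicks_per_day(p["clicks"], p["published"], as_of),
    reverse=True,
)

if __name__ == "__main__":
    for post in ranked:
        rate = clicks_per_day(post["clicks"], post["published"], as_of)
        print(f"{post['url']}: {rate:.2f} clicks/day")
```

By raw clicks, `/old-post` wins. By clicks per day, `/new-post` comes out ahead.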

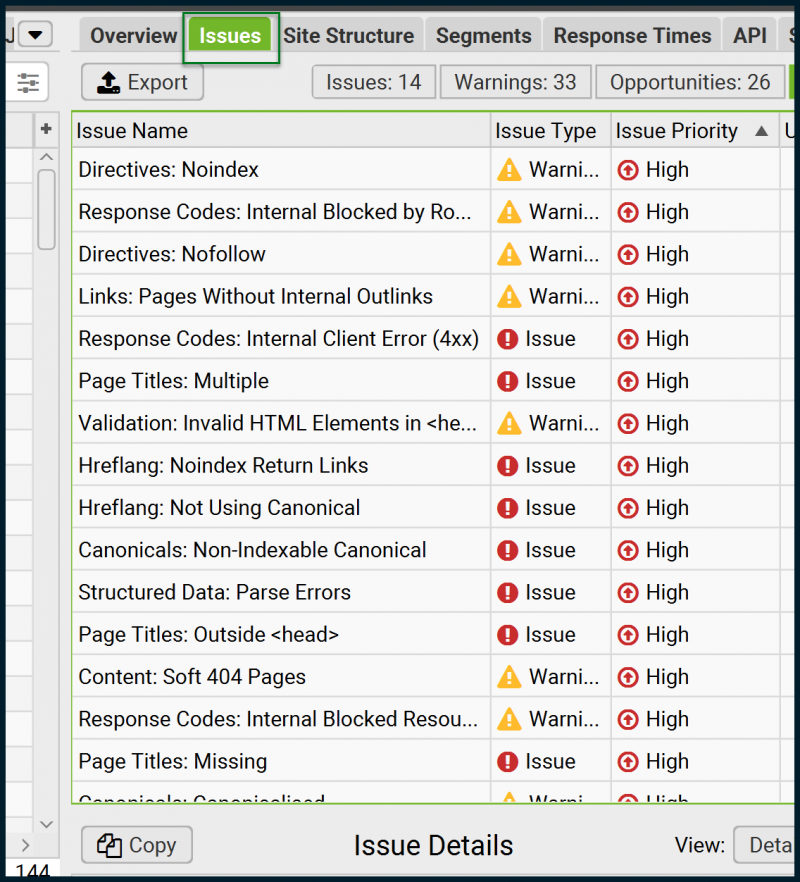

Screaming Frog also has an Issues tab, which attempts to highlight problems with the crawled website.

This feature is useful, but Screaming Frog’s main purpose is to provide data for you to analyze manually. Therefore, don’t rely on this section for perfect accuracy.

The tool focuses on delivering cost-effective and accurate data rather than offering guided support. To fully benefit from Screaming Frog, you should be comfortable working with spreadsheets.

Some exports from Screaming Frog can generate millions of rows of data. For instance, crawling about 200,000 addresses can produce many more internal links than addresses.

Since each webpage can link to others multiple times and receive links from various pages, this data can become very large and often exceeds Excel’s limit of 1,048,576 rows per sheet.

The same goes for Google Sheets, where the total cell limit is consumed much more quickly. In such a scenario, you may wish to become familiar with a large-scale CSV handling solution, such as Delimit by Delimitware or Modern CSV.

These relatively cheap products can help you to open millions of rows of data (if you export from Screaming Frog in CSV format) in seconds. You can then filter and reduce the data, preparing it for full handling in Excel.
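If you are comfortable with a little scripting, the same reduction step can be done without a dedicated viewer. The sketch below streams a CSV export row by row, so memory use stays flat regardless of file size, and writes only the matching rows to a smaller file. The column name and value in the usage example (`Type`, `Hyperlink`) and the file names are assumptions about what a link export might contain; adjust them to your actual file.

```python
import csv


def filter_rows(src_path: str, dst_path: str, column: str, wanted: str) -> int:
    """Copy rows where row[column] == wanted into dst_path; return rows kept."""
    kept = 0
    with open(src_path, newline="", encoding="utf-8") as src, \
         open(dst_path, "w", newline="", encoding="utf-8") as dst:
        reader = csv.DictReader(src)
        writer = csv.DictWriter(dst, fieldnames=reader.fieldnames or [])
        writer.writeheader()
        for row in reader:  # one row in memory at a time
            if row.get(column) == wanted:
                writer.writerow(row)
                kept += 1
    return kept


if __name__ == "__main__":
    import sys

    # Example: python filter_export.py all_inlinks.csv hyperlinks_only.csv
    if len(sys.argv) == 3:
        count = filter_rows(sys.argv[1], sys.argv[2], "Type", "Hyperlink")
        print(f"Kept {count} rows")
```

Once the filtered file is under Excel's row limit, it can be opened and analyzed as usual.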

2. Ahrefs

Top-line overview

- Cloud-based platform.

- Expensive (but worth it).

- Accurate data, and masses of it.

- More user-friendly (when compared with Screaming Frog).

- Can be used for technical SEO, keyword research and backlink analysis.

Detail and usage

Screaming Frog primarily generates data without contextualizing it into actionable insights, focusing solely on technical SEO crawling. As a result, it lacks keyword and backlink data. This is where Ahrefs comes in.

Ahrefs is a cloud-based platform that includes a robust technical SEO site crawler. Among the cloud-based SEO tools, Ahrefs’ “Site Audit” is one of the most effective.

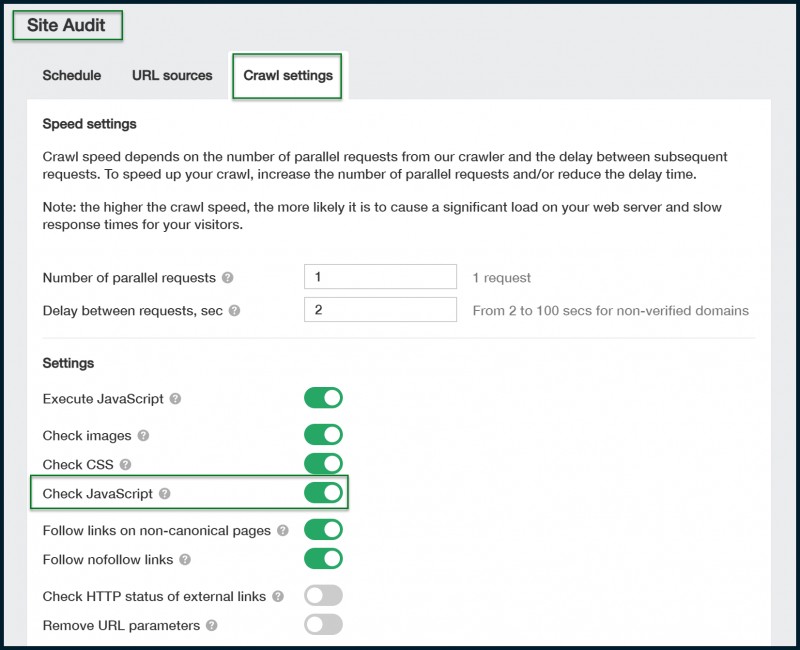

When JavaScript crawling is enabled in Ahrefs’ Site Audit, it successfully gathers information even from sites that heavily utilize JavaScript, such as single-page applications.

I’ve observed Ahrefs retrieving data from JavaScript-generated elements that Screaming Frog might overlook, even when JavaScript rendering is activated.

In the crawl settings shown above, be sure to toggle on JavaScript crawling. Executing JavaScript can be time-intensive, so plan ahead and leave enough time for your crawl.

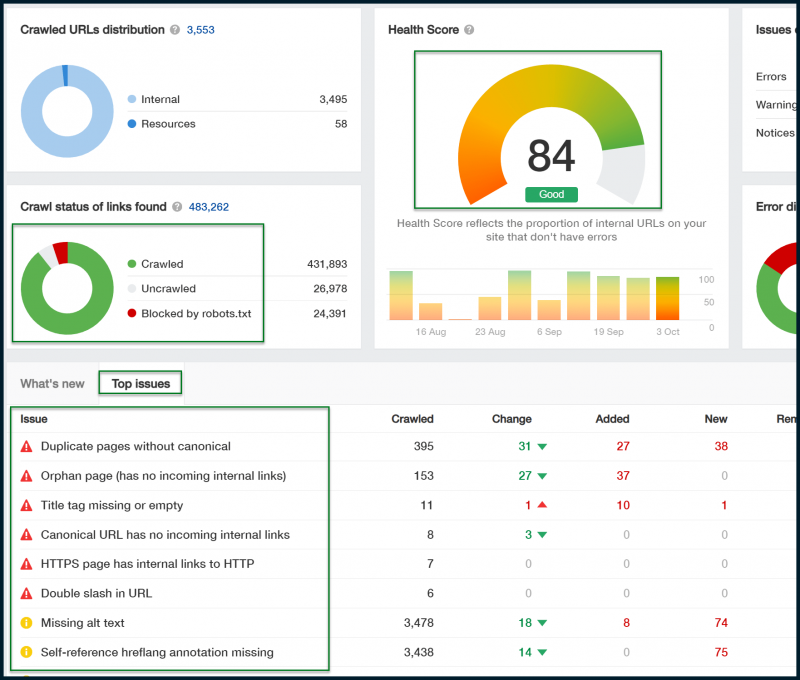

Ahrefs also goes much further than Screaming Frog in giving a broad snapshot of your technical SEO performance.

You can click on each issue to explore it further, and there’s always a “Why and how to fix” link that provides additional details about the solution.

If you prefer raw data, go to Page Explorer, where you can customize the displayed columns and export the data.

While the output isn’t quite as detailed as Screaming Frog’s, it comes close.

With just a few minutes of customization, you can create a table that resembles Screaming Frog’s export format.

3. URL Profiler

Top-line overview

- Desktop-based tool.

- Cost-effective (if you already have API keys for other platforms).

- Single-purpose tool.

- Helps to automate the fetching of authority metrics.

Detail and usage

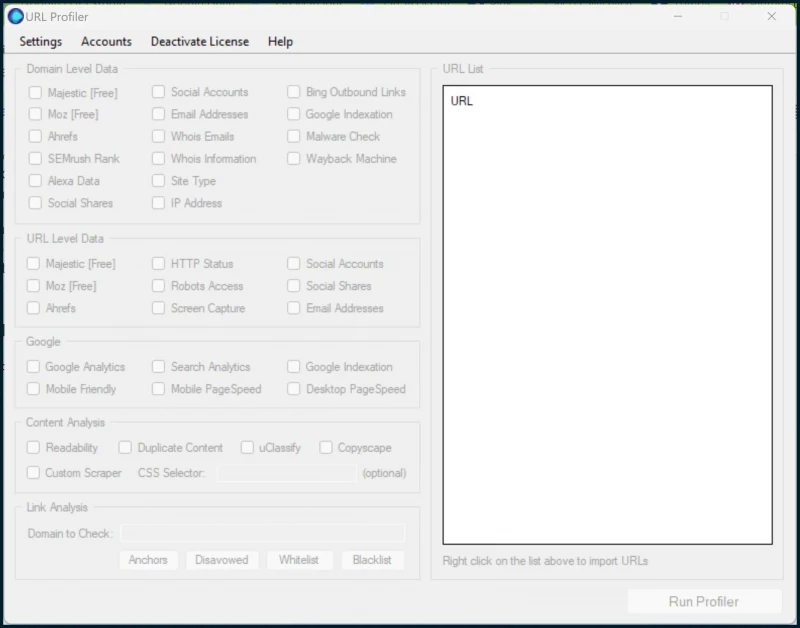

If you’re working with vast quantities of backlink data, you might want to check out URL Profiler.

This tool lets you input API keys from various platforms, such as Majestic, Ahrefs and Moz, provided you have the necessary subscriptions.

This feature is beneficial if you have a lengthy list of pages – potentially thousands – and need to assess metrics for each one to determine its value.

You might use it to evaluate internal page authority after crawling a client’s site with Screaming Frog or to assess the strength of potential link opportunities.

Ahrefs offers a “Batch Analysis” tool that returns metrics for up to 500 URLs at a time (Batch Analysis version 2.0).

However, if you need to analyze a larger number of URLs, you have to work through them in batches, and you’ll only receive Ahrefs metrics for the URLs you input.

In such cases, having a tool like URL Profiler is advantageous for managing larger projects, especially when you have access to multiple API keys from various SEO platforms.
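To get a feel for the manual overhead that a batch limit creates, here is a small Python sketch that splits a URL list into chunks of 500, the Batch Analysis limit mentioned above. The URL list is generated purely for illustration.

```python
from typing import Iterator

BATCH_LIMIT = 500  # per-batch URL limit mentioned above


def batches(urls: list[str], size: int = BATCH_LIMIT) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` URLs."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


if __name__ == "__main__":
    # Hypothetical list of 1,234 URLs from a crawl export.
    urls = [f"https://example.com/page-{i}" for i in range(1234)]
    for number, batch in enumerate(batches(urls), start=1):
        print(f"Batch {number}: {len(batch)} URLs")
```

A list of 1,234 URLs already needs three separate runs, which is where an API-driven tool starts to save real time.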

Another alternative to URL Profiler is Netpeak Checker, which provides similar functionality.

4. GTmetrix

Top-line overview

- Cloud-based tool.

- Free to use.

- Helps to identify page speed issues.

- Useful alongside PageSpeed Insights and Chrome DevTools.

Detail and usage

Most SEOs use PageSpeed Insights to analyze page speed and Core Web Vitals. However, GTmetrix can also be beneficial in certain situations.

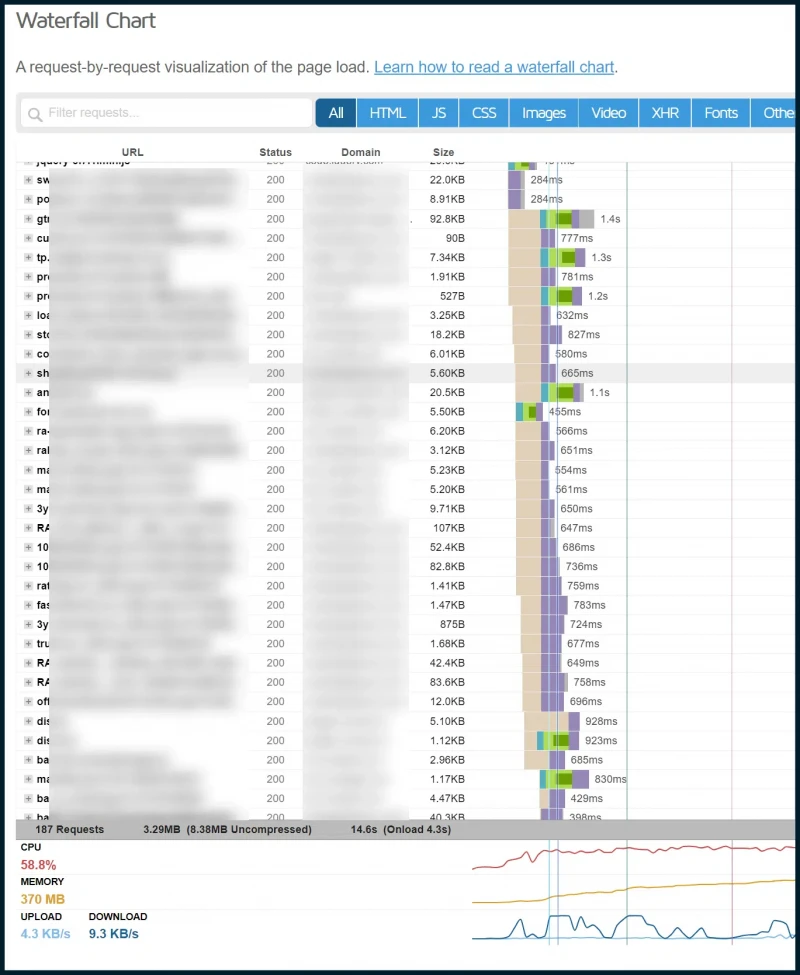

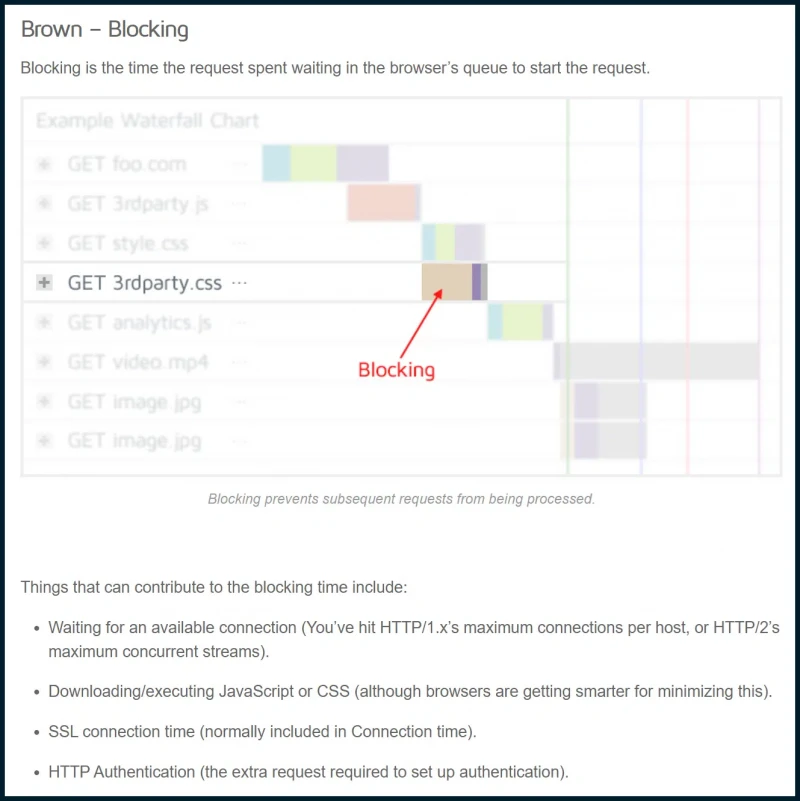

By signing up for a free account, you gain access to useful features like the waterfall chart, which provides valuable insights into your website’s performance.

Above, you can observe a page that pulls in a tremendous volume of resources (JavaScript, CSS, images, etc.). Brown bars, which the GTmetrix waterfall uses to mark time a request spends blocked before it is sent, account for much of the loading time.

With so many requests queuing rather than downloading, we can quickly infer that exceeding HTTP/2’s maximum concurrent streams is likely a factor.

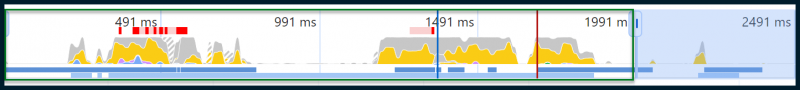

Most of the resources were images rather than JS modules. Let’s check this out using the Performance tab of Chrome DevTools:

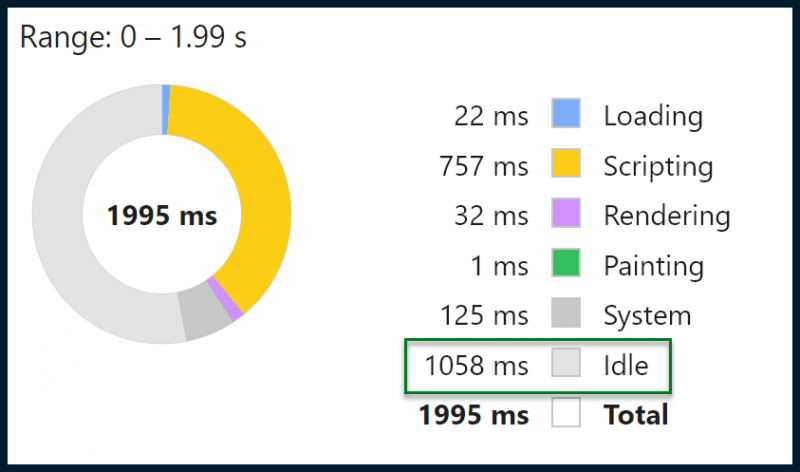

In the recording above, the main page load occurs between 0 ms and 2 seconds.

As you can see, there is a significant amount of “Idle” time, indicating periods when the user’s CPU or GPU is not actively rendering the page.

This is likely due to the browser waiting for the server to respond to resource requests (e.g., images), suggesting that the maximum number of HTTP/2 concurrent streams may be exceeded. These findings can be relayed to a web developer for further action.
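Before handing this over, it can help to put rough numbers on the problem. The sketch below reads a HAR file (which Chrome DevTools can export from the Network panel) and counts requests per host and per resource type. The limit of 100 is an assumption: each server advertises its own maximum concurrent streams value, and 100 is only the minimum the HTTP/2 specification recommends. Note that a total request count is a crude proxy, since not all requests are in flight at once, so treat any host flagged here as a lead to investigate rather than proof.

```python
import json
from collections import Counter
from urllib.parse import urlsplit

# Assumed limit; the real value is set per server.
ASSUMED_STREAM_LIMIT = 100


def load_har(path: str) -> dict:
    """Load a HAR file (JSON) from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def summarize_har(har: dict) -> tuple[Counter, Counter]:
    """Count requests per host and per top-level MIME type (image, text, ...)."""
    per_host, per_type = Counter(), Counter()
    for entry in har["log"]["entries"]:
        per_host[urlsplit(entry["request"]["url"]).netloc] += 1
        mime = entry["response"]["content"].get("mimeType") or "unknown"
        per_type[mime.split("/")[0]] += 1
    return per_host, per_type


def hosts_over_limit(per_host: Counter, limit: int = ASSUMED_STREAM_LIMIT) -> list[str]:
    """Hosts whose total request count exceeds the assumed stream limit."""
    return [host for host, count in per_host.items() if count > limit]
```

If one host serves several hundred images, as in the example above, that figure gives the developer a concrete starting point.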

By using GTmetrix alongside Chrome DevTools, you can quickly and easily identify these issues.