Want to improve rankings and traffic? Stop blindly following SEO tool recommendations

SEO tools can be invaluable for optimizing your site – but if you blindly follow every recommendation they spit out, you may be doing more harm than good.

Let’s explore the biggest pitfalls of SEO tools and how to use them to genuinely benefit your site.

Why blindly following SEO tools can harm your site

SEO tools are a double-edged sword for anyone involved in content creation or digital marketing.

On the one hand, they offer valuable insights that can guide your strategy, from keyword opportunities to technical optimizations. On the other hand, blindly following their recommendations can lead to serious problems.

Overoptimized content, cosmetic reporting metrics and incorrect technical advice are just some pitfalls of overreliance on SEO tools.

Worse yet, site owners often mistakenly try to optimize for these tool-specific metrics. This is something Google’s John Mueller specifically commented on recently when urging bloggers not to take shortcuts with their SEO:

- “Many SEO tools have their own metrics that are tempting to optimize for (because you see a number), but ultimately, there’s no shortcut.”

I’ve worked with thousands of sites and have seen firsthand the damage that can be done when SEO tools are misused. My goal is to prevent that same damage from befalling you!

This article details some of the worst recommendations from these tools based on my own experience – recommendations that not only contradict SEO best practices but can also harm your site’s performance.

The discussion will cover more than just popular tool deficiencies. We’ll also explore how to use these tools correctly, making them a complement to your overall strategy rather than a crutch.

Finally, I’ll break down the common traps to avoid – like over-relying on automated suggestions or using data without proper context – so you can stay clear of the issues that often derail SEO efforts.

By the end, you’ll have a clear understanding of how to get the most out of your SEO tools without falling victim to their limitations.

SEO tools never provide the full picture to bloggers

Without fail, I receive at least one panicked email a week from a blogger reporting a traffic drop. The conversation usually goes something like this:

- Blogger: “Casey, my traffic is down 25% and I’m panicking here.”

- Me: “Sorry to hear this. Can you tell me where you saw the drop? Are you looking in Google Search Console? Google Analytics? A blog analytics dashboard? Where do you see the drop?”

- Blogger: “Uh, no. I’m looking at the Visibility Graph in [Insert SEO Tool Name here] and it’s showing a noticeable decline!”

This is a common response. I’ve gotten the same email from both novice and experienced bloggers.

The issue is one of education. Visibility tools, in general, are horribly unreliable.

These tools track a subset of keyword rankings as an aggregate, using best-guess traffic volume numbers, third-party clickstream data and their own proprietary algorithms.

The result: these tools tend to conflate all keyword rankings into one visibility number!

That’s a problem if you suddenly lose a ton of keywords in, for example, positions 50-100, which lowers the overall visibility number for the entire domain.

It’s likely those 50-100+ position keywords were not sending quality traffic in the first place. But because the blogger lost them, the visibility index has decreased, and boom, it looks like they suffered a noticeable traffic drop!
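To make the arithmetic concrete, here is a minimal sketch of how that can happen. Everything in it is an assumption for illustration: the linear position weighting, the click-through rates, the flat search volume and the keyword positions are all made up, and no real tool publishes or necessarily uses this formula.

```python
def visibility_weight(position):
    # Hypothetical linear weighting: position 1 scores 1.0, position 100 scores 0.01.
    return max(0, 101 - position) / 100


def estimated_ctr(position):
    # Hypothetical click-through rates; real values vary by query and SERP layout.
    if position <= 3:
        return {1: 0.30, 2: 0.15, 3: 0.10}[position]
    if position <= 10:
        return 0.03
    if position <= 20:
        return 0.005
    return 0.0  # assume deep positions send essentially no clicks


def visibility(rankings):
    # Toy "visibility index": every tracked keyword contributes, however deep it ranks.
    return sum(visibility_weight(p) for p in rankings)


def clicks(rankings, volume=1000):
    # Estimated clicks, assuming the same monthly search volume for every keyword.
    return sum(volume * estimated_ctr(p) for p in rankings)


def percent_drop(before, after):
    return 100 * (before - after) / before


top_keywords = [2, 5, 8]                      # three page-one rankings
deep_keywords = list(range(50, 100, 2))       # 25 keywords in positions 50-98

before = top_keywords + deep_keywords
after = top_keywords                          # all deep keywords are lost

vis_drop = percent_drop(visibility(before), visibility(after))
click_drop = percent_drop(clicks(before), clicks(after))

print(f"Visibility index drop: {vis_drop:.0f}%")
print(f"Estimated click drop:  {click_drop:.0f}%")
```

Under these assumptions, the toy index falls by well over half while estimated clicks do not move at all, because none of the lost keywords were earning clicks to begin with.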

Plenty of visibility tools and metrics exist in the SEO space, and many have value. They can and should be deployed quickly to pinpoint where actual SEO research should come into play when diagnosing problems.

But as SEOs, we educate clients that these same tools should never be the final authority on matters as important as traffic drops or troubleshooting possible SEO issues.

When forming solid hypotheses and recommended action items, always prioritize first-party data in Google Analytics, Google Search Console, etc.

Questionable SEO tool recommendations from recent experience

It’s not just these “visibility metrics” that give tools a bad name.

Many of the most popular tools in the niche report outdated metrics that have been debunked as a waste of time when prioritizing SEO work.

One of those metrics is the popular text-to-HTML ratio metric.

Briefly defined, the metric compares the amount of text on the page to the HTML code required to display it.

This is usually expressed as a percentage, with a “higher” percentage being preferred, as that signifies more text in relation to the code.

Even though this has been repeatedly denied as a ranking factor, it is still a reported audit finding in most crawling programs and popular SEO tool suites.
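For readers who want to see how little is behind the number, here is a rough sketch of one way such a ratio could be calculated. It assumes the ratio is visible text characters divided by total HTML characters; individual tools may count bytes, treat whitespace differently or handle scripts in other ways, and the `TextExtractor` and `text_to_html_ratio` names are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html):
    # Assumed definition: visible text characters as a percentage of all HTML characters.
    if not html:
        return 0.0
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace so indentation in the markup is not counted as text.
    text = " ".join(" ".join(parser.chunks).split())
    return 100 * len(text) / len(html)


page = "<html><body><p>Hello world</p></body></html>"
ratio = text_to_html_ratio(page)
print(f"{ratio:.1f}%")  # 11 text characters out of 44 total
```

The calculation says nothing about whether the text is useful to a reader, which is part of why it makes a poor optimization target.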

The same can be said of toxic links and disavow files.

Google has publicly communicated multiple times that toxic links are great for selling tools and that you would be wise to ignore such reports, as they do nothing for you.

I can only speak to my experience, but I’ve only ever improved sites by removing disavow files.

Unless you actually have a links-based manual penalty that requires you to disavow links (links you shouldn’t have acquired in the first place), you should stay away from these files as well.

Finally, another great “tool recommendation” to ignore is the purposeful non-pagination of comments.

One of the simplest ways to increase page speed, reduce DOM nodes and improve a page’s bottom-line UX is to paginate comments.

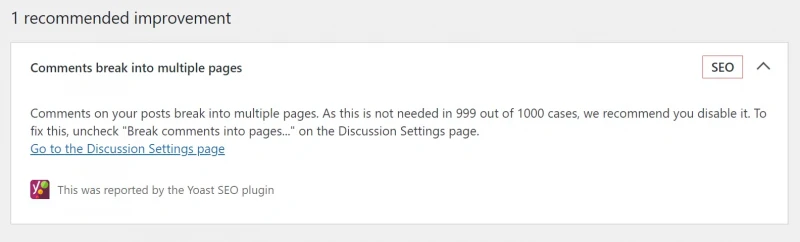

For years, the most popular SEO plugin on the planet, Yoast, provided a Site Health Warning that discouraged users from paginating comments.

Fortunately, after much back-and-forth on GitHub, this was resolved. You’ll still find this recommendation in many auditing tools and SEO plugins, even though it goes against Google’s own pagination best practices.

It’s important to understand that the best tools have moved beyond antiquated lexical models like keyword density, word count, TF-IDF and basically “words” in general.

Semantic search has been the order of the day for years, and you should invest in tools that offer actionable insights through information retrieval science and natural language processing.

Think entities, tokens and vectors over keywords and strings. That’s the recipe for tool success.

How to use SEO tools correctly to get the most actionable insights

Using SEO tools can be a powerful part of your strategy, but it’s essential to use them wisely.

While they provide an incredible range of data, a tool’s recommendations aren’t always tailored to your specific goals, audience or site context.

Let’s look at some best practices for using SEO tools effectively, ensuring they serve your strategy rather than controlling it.

1. Interpret data with context

SEO tools work off their own metrics and internal algorithms, providing data points that can help guide strategy.

However, they lack the human understanding of what makes content genuinely valuable to readers.

When a tool suggests adding more keywords, for instance, think twice before keyword-stuffing – it may boost certain metrics, but it often sacrifices user experience.

Every piece of tool data should be taken as a starting point, not a final directive.

2. Use multiple tools for a rounded perspective

Relying on just one SEO tool can lead to a narrow or skewed view of your site’s performance.

Each tool has unique metrics and algorithms that emphasize different aspects of SEO, so combining insights from platforms like Google Search Console, Semrush and Ahrefs gives you a broader understanding.

Cross-referencing can provide a more balanced perspective, helping you make better-informed decisions.

3. Prioritize content quality above all

Many SEO tools focus heavily on technical metrics – heading structure, backlinks or schema deployment, for example.

While important, these shouldn’t overshadow your focus on quality content. A content-first approach remains at the heart of effective SEO.

Tools can help refine and enhance, but content that’s useful and engaging for your audience is what ultimately drives long-term success.

4. Keep your strategy aligned with updates

SEO is constantly changing, with Google’s algorithm updates reshaping best practices regularly.

Revisit and adjust your strategies to keep them aligned with the latest insights.

Tools also frequently update their metrics and algorithms, so it’s wise to monitor new features or recommendations that may add fresh value to your approach.

5. Prioritize user experience (UX)

SEO tools sometimes emphasize optimizations that may work well for search engines but less well for real users.

For example, a tool may recommend pop-ups to capture leads, but if they interfere with usability, they can lead to high bounce rates and lower overall revenue.

Always put user experience at the forefront, focusing on aspects like site speed, mobile responsiveness and accessibility.

Tools can be a great help – when approached objectively

As I state regularly in audits, SEO is all about the little things.

For most sites, it’s never one issue identified by a tool that determines future fortunes. More often, it’s a death-by-a-thousand-cuts situation in which many small problems cause the site to underperform.

Tools can provide insights, allowing you to best triage your site in these situations. But they should never be followed blindly. Unfortunately, many users (and SEOs) do just that!

In the end, SEO tools are best used when the user approaches them as “aids” rather than “solutions.”

Weigh every tool recommendation against whether it genuinely benefits your site’s audience, and the end result will be a solid foundation for long-term growth.